DVMNet: Computing Relative Pose for Unseen Objects Beyond Hypotheses

CVPR 2024

Abstract [Full Paper]

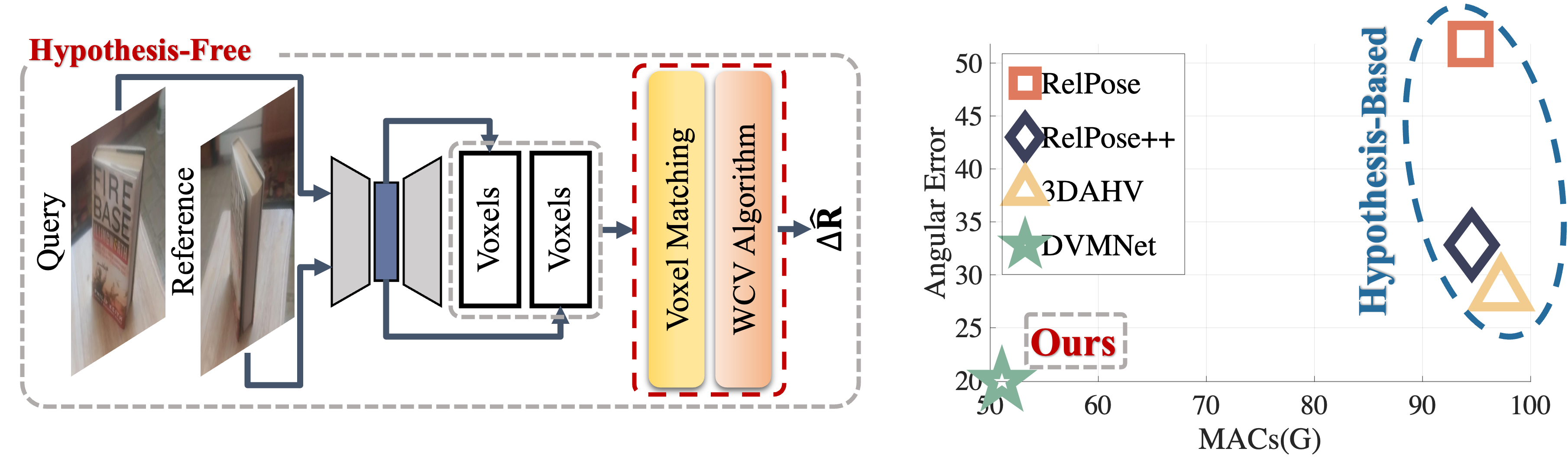

Determining the relative pose of an object between two images is pivotal to the success of generalizable object pose estimation. Existing approaches typically approximate the continuous pose representation with a large number of discrete pose hypotheses, which incurs a computationally expensive process of scoring each hypothesis at test time. By contrast, we present a Deep Voxel Matching Network (DVMNet) that eliminates the need for pose hypotheses and computes the relative object pose in a single pass. To this end, we map the two input RGB images, reference and query, to their respective voxelized 3D representations. We then pass the resulting voxels through a pose estimation module, where the voxels are aligned and the pose is computed in an end-to-end fashion by solving a least-squares problem. To enhance robustness, we introduce a weighted closest voxel algorithm capable of mitigating the impact of noisy voxels.

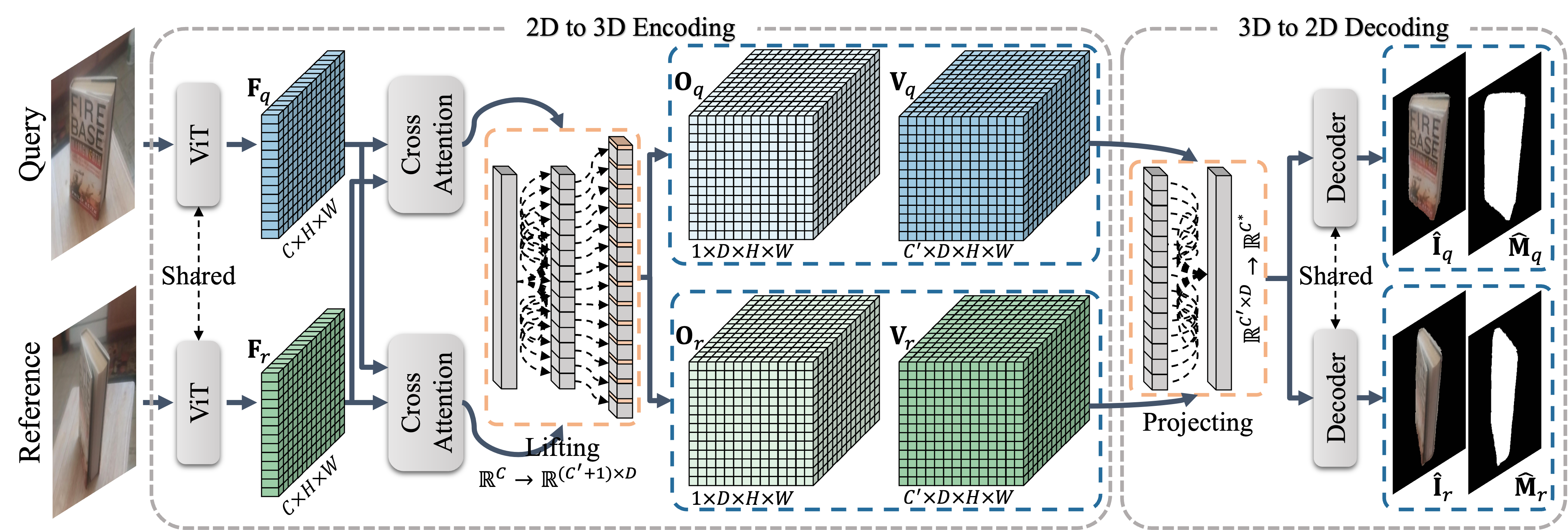

Method Overview

The encoder takes two RGB images, query and reference, as input and lifts their 2D feature embeddings to 3D voxels by leveraging cross-view 3D information. The decoder then reconstructs the masked object images from the voxels, allowing the voxels to encode the object patterns.

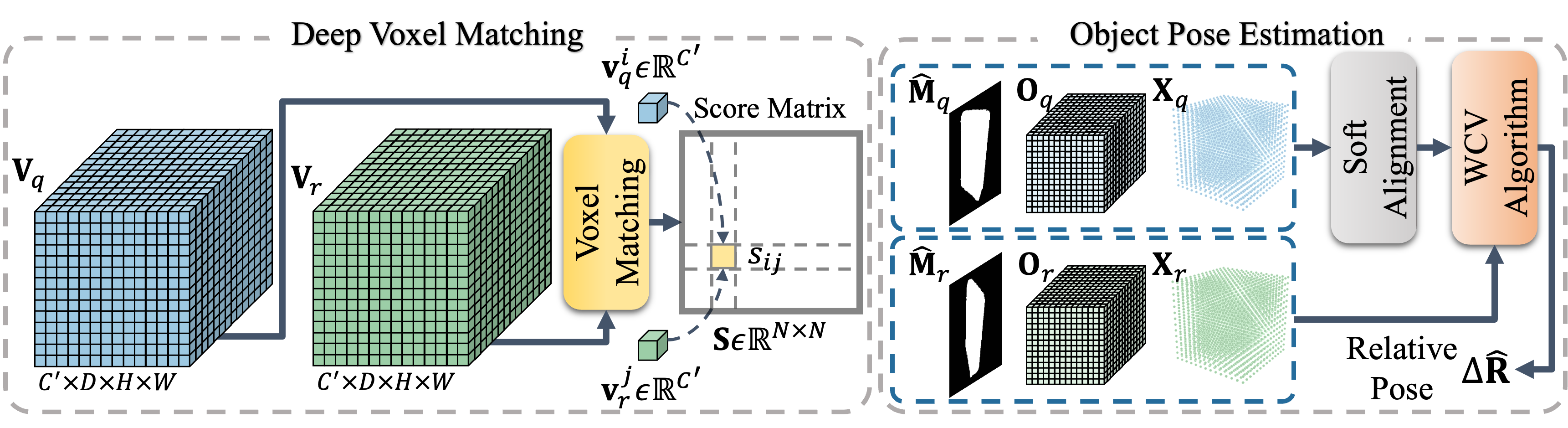

The feature similarities between the query voxels $\mathbf{V}_q$ and the reference voxels $\mathbf{V}_r$ are computed, yielding a score matrix $\mathbf{S}$. Based on $\mathbf{S}$, a soft assignment is applied to the query object mask $\hat{\mathbf{M}}_q$, the 3D objectness map $\mathbf{O}_q$, and the 3D coordinates $\mathbf{X}_q$. The aligned query and reference voxels are then fed into a Weighted Closest Voxel (WCV) algorithm that estimates the relative object pose in a robust and end-to-end manner.
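The matching and pose-solving steps described above can be illustrated with a minimal NumPy sketch. This is not the released DVMNet code: the function names `soft_assign` and `weighted_rigid_fit`, the cosine-similarity score, the softmax temperature, and the flattened `(N, C)` voxel layout are all assumptions made for illustration. The pose solver shown is the standard weighted least-squares rigid alignment (weighted Kabsch/Procrustes via SVD), used here as a stand-in for the WCV step, with per-voxel weights playing the role of the confidence that down-weights noisy voxels.

```python
import numpy as np


def soft_assign(feat_q, feat_r, xyz_q, temperature=0.1):
    """Softly align query voxel coordinates to the reference voxels.

    feat_q: (Nq, C) query voxel features, feat_r: (Nr, C) reference features,
    xyz_q: (Nq, 3) query voxel coordinates. Returns (Nr, 3) aligned coordinates.
    Cosine similarity and the temperature are illustrative assumptions.
    """
    fq = feat_q / np.linalg.norm(feat_q, axis=1, keepdims=True)
    fr = feat_r / np.linalg.norm(feat_r, axis=1, keepdims=True)
    scores = fr @ fq.T                           # score matrix S, shape (Nr, Nq)
    logits = scores / temperature
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    assign = np.exp(logits)
    assign /= assign.sum(axis=1, keepdims=True)  # row-wise softmax
    return assign @ xyz_q                        # weighted average of query coords


def weighted_rigid_fit(src, dst, w):
    """Return R, t minimizing sum_i w_i * ||R @ src_i + t - dst_i||^2."""
    w = w / w.sum()
    src_c = (w[:, None] * src).sum(axis=0)       # weighted centroids
    dst_c = (w[:, None] * dst).sum(axis=0)
    # Weighted cross-covariance between the centered point sets.
    H = (src - src_c).T @ (w[:, None] * (dst - dst_c))
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t
```

In this sketch, one would call `soft_assign` to obtain query coordinates aligned with each reference voxel, then pass the reference coordinates, the aligned query coordinates, and a weight vector (for example, one derived from the objectness maps, which is again an assumption) to `weighted_rigid_fit`. Because both steps are differentiable matrix operations, the same structure can be trained end to end in an autodiff framework.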

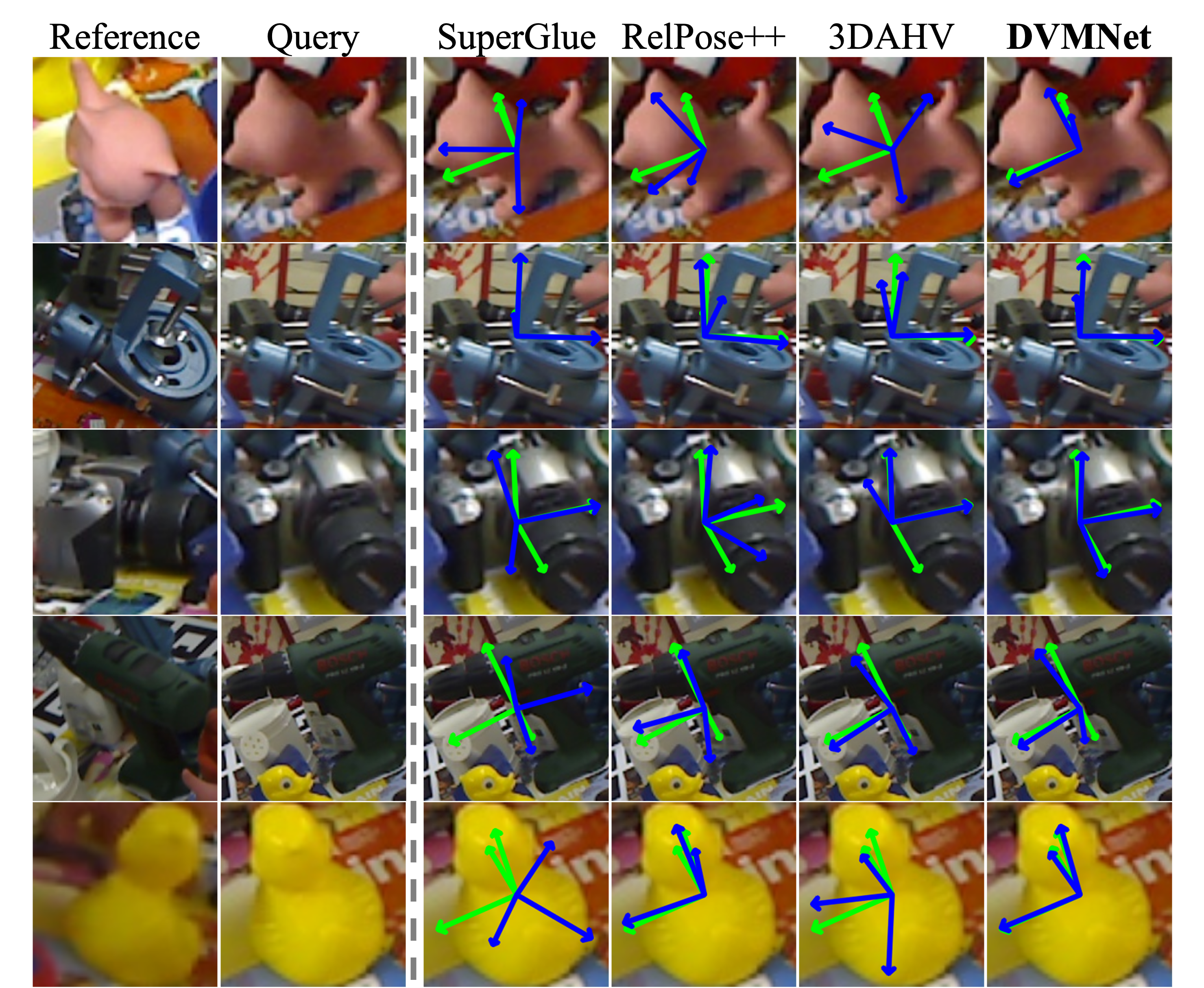

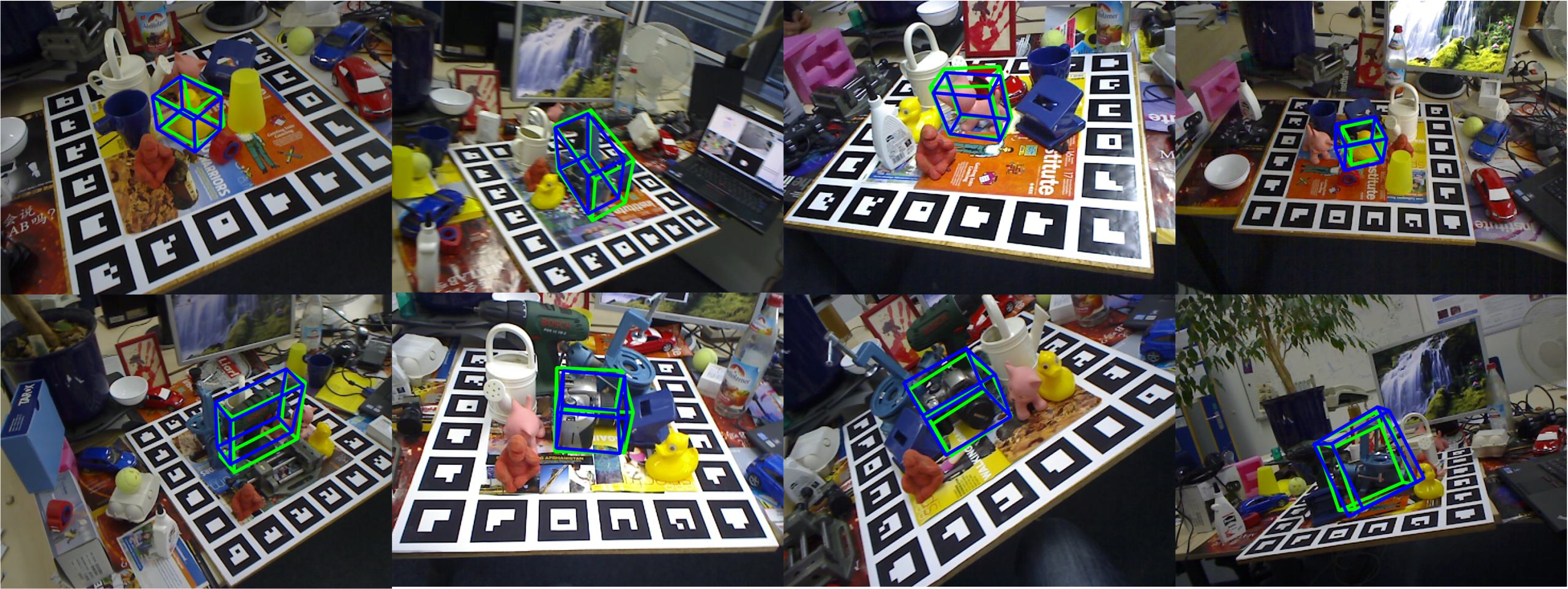

Results on Objaverse and LINEMOD

Citation

@article{zhao2024dvmnet,
  title={DVMNet: Computing Relative Pose for Unseen Objects Beyond Hypotheses},
  author={Zhao, Chen and Zhang, Tong and Dang, Zheng and Salzmann, Mathieu},
  journal={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2024}
}

Contact

If you have any questions, please contact Chen Zhao at chen.zhao@epfl.ch.