Abstract

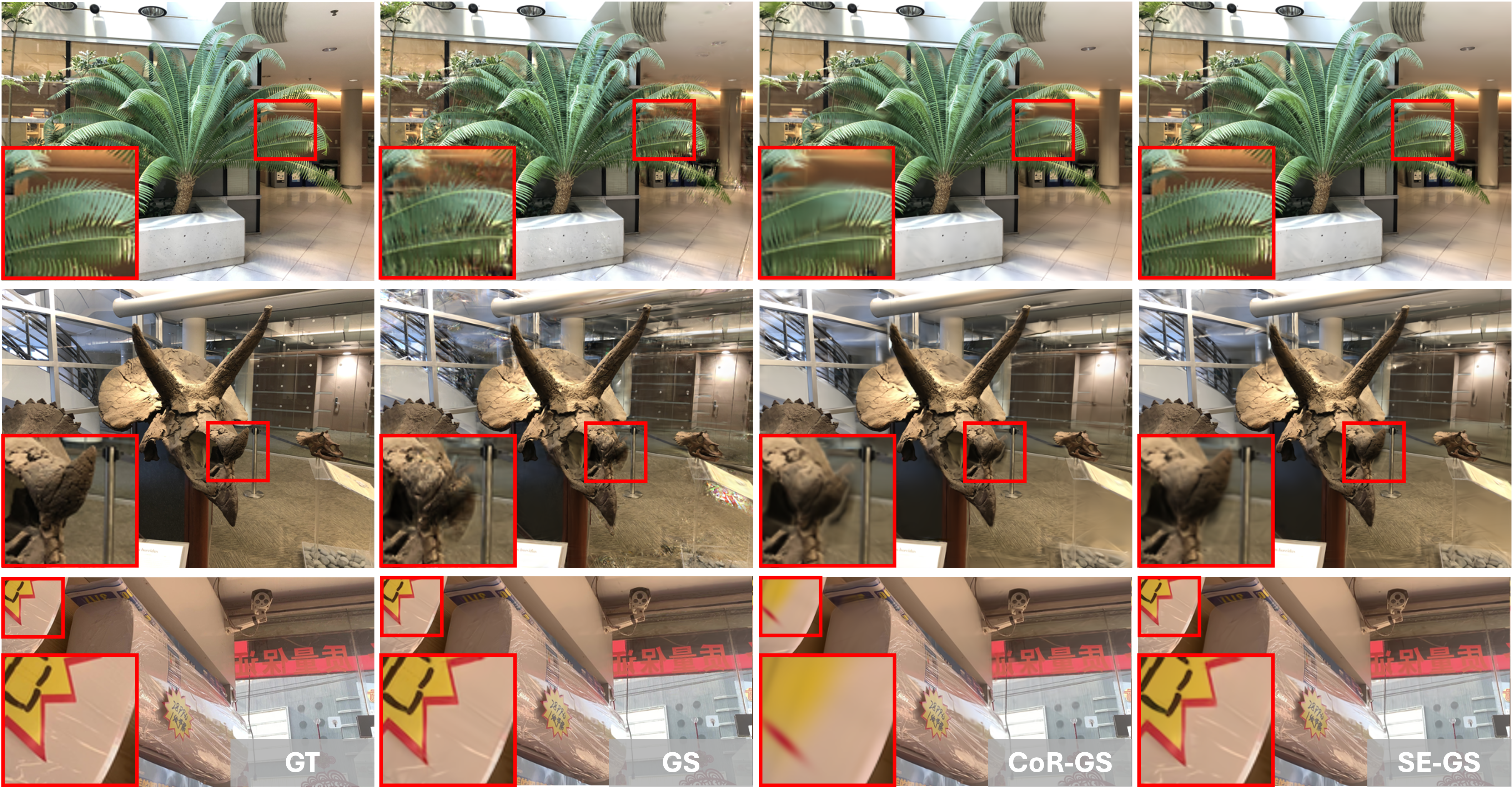

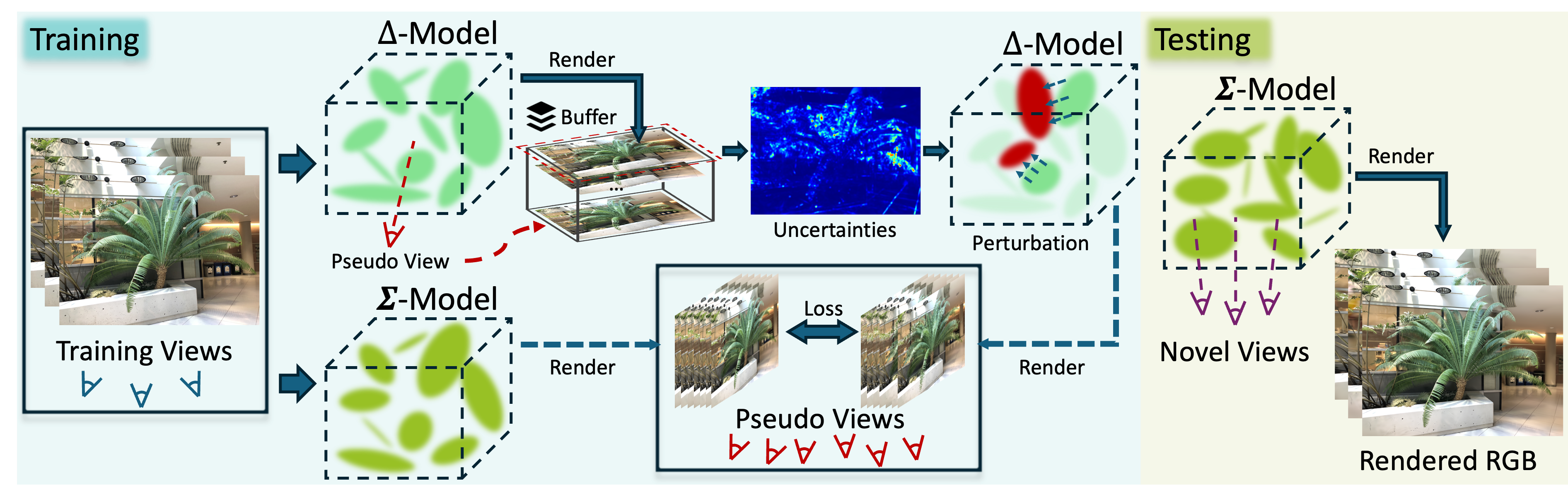

3D Gaussian Splatting (3DGS) has demonstrated remarkable effectiveness for novel view synthesis (NVS). However, 3DGS models tend to overfit when trained with sparse posed views, which limits their ability to generalize to novel views. To alleviate this overfitting problem, we present a Self-Ensembling Gaussian Splatting (SE-GS) approach. Our method comprises a Σ-model and a Δ-model. The Σ-model serves as an ensemble of 3DGS models and generates novel-view images during inference. We achieve self-ensembling by introducing an uncertainty-aware perturbation strategy at the training stage. We complement the Σ-model with the Δ-model, which is dynamically perturbed based on the uncertainties of novel-view renderings across different training steps. The perturbation yields diverse 3DGS models without additional training costs.
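
As a rough illustration of how the Σ-model could be regularized toward the perturbed Δ-model, the following combines a photometric term on a training view with a consistency term on a pseudo view. It is a minimal sketch under assumptions, not the SE-GS implementation: renderings are flattened grayscale images given as lists of floats, both terms use an L1 distance, and the names `sigma_model_loss`, `l1`, and the weight `lam` are hypothetical.

```python
from typing import Sequence


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute difference between two flattened images of equal size."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def sigma_model_loss(
    sigma_train: Sequence[float],
    gt_train: Sequence[float],
    sigma_pseudo: Sequence[float],
    delta_pseudo: Sequence[float],
    lam: float = 1.0,
) -> float:
    """Hypothetical per-step objective for the Σ-model (assumed L1 terms).

    sigma_train, gt_train: Σ-model rendering and ground truth of a training view.
    sigma_pseudo, delta_pseudo: Σ-model and perturbed Δ-model renderings of the
    same pseudo view; the second term penalizes their discrepancy.
    """
    photometric = l1(sigma_train, gt_train)
    consistency = l1(sigma_pseudo, delta_pseudo)
    return photometric + lam * consistency


# Usage with toy two-pixel images.
loss = sigma_model_loss([0.2, 0.4], [0.0, 0.4], [0.5, 0.5], [0.5, 0.9], lam=0.5)
```

Because the Δ-model is perturbed differently at each step, repeatedly minimizing the consistency term pulls the Σ-model toward many perturbed models over the course of training, which is one way to read "self-ensembling". In an autodiff framework, the Δ-model rendering would presumably be treated as a fixed target for this term; that detail is an assumption here.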

The perturbed models are derived from the Δ-model via an uncertainty-aware perturbation strategy. We store images rendered from pseudo views at different training steps in buffers, from which we compute pixel-level uncertainties. We then perturb the Gaussians that overlap pixels with high uncertainty (highlighted as red ellipses). Self-ensembling over the perturbed models is achieved by training the Σ-model with a regularization term that penalizes discrepancies between the Σ-model and the perturbed Δ-model. During inference, novel view synthesis is performed using the Σ-model.
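
The uncertainty-aware perturbation step can be sketched as a toy example, under assumptions this section does not confirm: pixel-level uncertainty is taken as the per-pixel standard deviation across buffered pseudo-view renderings, each Gaussian's screen-space footprint is approximated by a circle, a fixed `threshold` marks high-uncertainty pixels, and only the 3D means of flagged Gaussians receive isotropic Gaussian noise with standard deviation `std`. All names (`Gaussian2D`, `pixel_uncertainty`, `uncertain_gaussians`, `perturb`) are hypothetical.

```python
import math
import random
from dataclasses import dataclass, replace
from typing import List, Sequence, Set, Tuple

# A grayscale H x W rendering; a hypothetical stand-in for a real image tensor.
Image = List[List[float]]


@dataclass(frozen=True)
class Gaussian2D:
    """Hypothetical record of one Gaussian: its projected footprint in a pseudo
    view (approximated as a circle) and the 3D mean the perturbation acts on."""
    cx: float  # projected center, column coordinate
    cy: float  # projected center, row coordinate
    radius: float  # footprint radius in pixels
    mean3d: Tuple[float, float, float]


def pixel_uncertainty(buffer: Sequence[Image]) -> Image:
    """Per-pixel standard deviation across renderings of one pseudo view
    stored at different training steps (assumed uncertainty measure)."""
    n = len(buffer)
    h, w = len(buffer[0]), len(buffer[0][0])
    unc = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[y][x] for img in buffer]
            mu = sum(vals) / n
            unc[y][x] = math.sqrt(sum((v - mu) ** 2 for v in vals) / n)
    return unc


def uncertain_gaussians(
    gaussians: Sequence[Gaussian2D], unc: Image, threshold: float
) -> Set[int]:
    """Indices of Gaussians whose footprint covers at least one pixel
    with uncertainty above `threshold`."""
    flagged: Set[int] = set()
    for y, row in enumerate(unc):
        for x, u in enumerate(row):
            if u <= threshold:
                continue
            for i, g in enumerate(gaussians):
                if (x - g.cx) ** 2 + (y - g.cy) ** 2 <= g.radius ** 2:
                    flagged.add(i)
    return flagged


def perturb(
    gaussians: Sequence[Gaussian2D], flagged: Set[int], std: float, seed: int = 0
) -> List[Gaussian2D]:
    """Return a perturbed copy of the Δ-model: isotropic Gaussian noise is added
    to the 3D means of flagged Gaussians only; the input is not modified."""
    rng = random.Random(seed)
    out: List[Gaussian2D] = []
    for i, g in enumerate(gaussians):
        if i in flagged:
            noisy = tuple(c + rng.gauss(0.0, std) for c in g.mean3d)
            g = replace(g, mean3d=noisy)
        out.append(g)
    return out


# Usage: two buffered renderings disagree only at the top-left pixel.
buffer = [[[0.0, 0.5], [0.5, 0.5]], [[1.0, 0.5], [0.5, 0.5]]]
gaussians = [
    Gaussian2D(0.0, 0.0, 0.5, (0.0, 0.0, 0.0)),  # covers pixel (0, 0)
    Gaussian2D(1.0, 1.0, 0.5, (1.0, 1.0, 1.0)),  # covers pixel (1, 1) only
]
flagged = uncertain_gaussians(gaussians, pixel_uncertainty(buffer), threshold=0.1)
perturbed = perturb(gaussians, flagged, std=0.05)
```

In this toy setup only the first Gaussian overlaps the uncertain pixel, so it alone is perturbed. Returning a new list rather than editing in place keeps the unperturbed Δ-model available for the next training step, where a different perturbation can be drawn.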